On February 4, 2011, LIFEGUARD detected and isolated an outage between a source at George Mason University (GMU) and a destination address in a Russian ISP named Smartkom. The following text explains the details of the outage (at the AS level, for simplicity) and how LIFEGUARD isolated the failed link. A detailed diagram of the measurements is located at the bottom of the page.

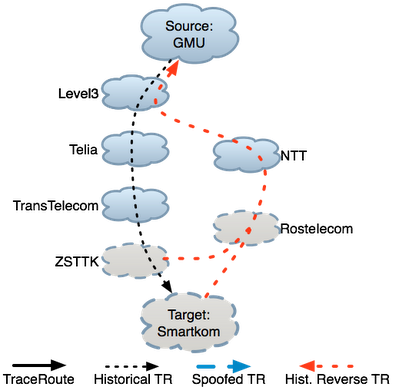

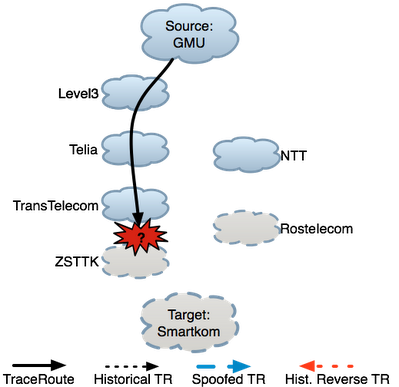

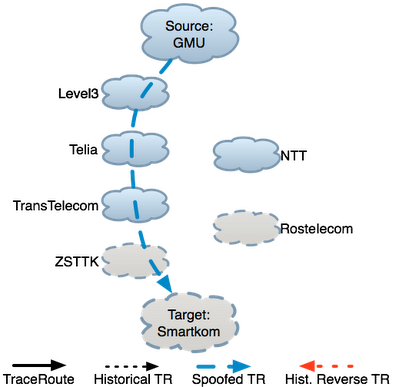

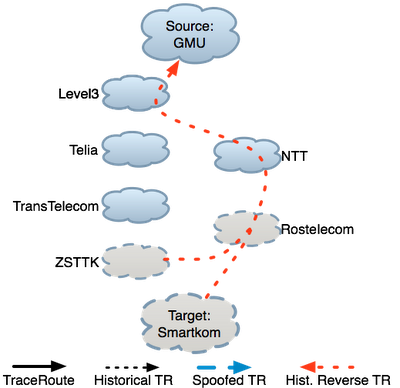

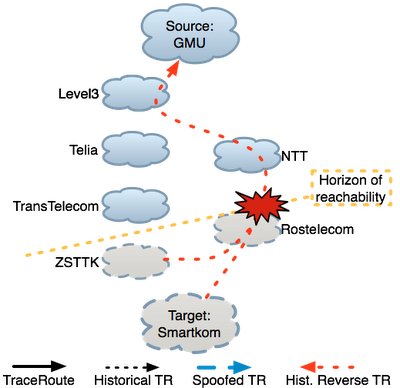

Maintain background atlas: In steady state, LIFEGUARD uses traceroute and reverse traceroute to regularly map the forward and reverse paths between its vantage points and the destinations it is monitoring. During failures, this path atlas yields both a view of what paths looked like shortly before the failure and a historical view of path changes over time. These paths provide likely candidates for failure locations and serve as the basis for some of the isolation measurements discussed below. The figure to the right depicts historical forward and reverse traceroutes with dotted black and red lines, respectively.

Measure the path during an outage: During an outage, LIFEGUARD issues a traceroute to the destination, as is commonly done to determine the last responsive hop along a failed path. The solid black line in the figure depicts the traceroute from GMU during the failure. Traceroute can provide misleading information in the presence of failures. In this case, the last responsive hop is a TransTelecom router, suggesting that the failure may be adjacent to this hop, between TransTelecom and ZSTTK. However, without further information, operators cannot be sure, since the probes may have been dropped on the reverse paths back from hops beyond TransTelecom.

Isolate direction of failure and measure working direction: LIFEGUARD tries to isolate the direction of the failure using spoofed pings. In the example, spoofed probes sent from GMU to Smartkom reached other vantage points, but no probes spoofed as GMU from other vantage points reached GMU, implying a reverse path failure. When the failure is unidirectional, LIFEGUARD measures the complete path in the working direction. Building on Hubble’s spoofed ping technique, LIFEGUARD uses spoofed TTL-limited probes to identify hops in the working direction while avoiding the failing direction.
For a reverse failure, LIFEGUARD finds a vantage point with a working path back from the destination D, then has the source S send a spoofed traceroute to D, spoofing as the working vantage point. In the example, GMU issued a spoofed traceroute towards the destination. The blue edges in the figure show the spoofed traceroute.

It is useful to measure the working direction of the path for two reasons. First, since the path is likely a valid policy-compliant path in the failing direction, it may provide a working alternative for avoiding the failure. Second, knowledge of the path in the working direction can guide isolation of the problem in the failing direction, as discussed below.

Test atlas paths in failing direction: Once it has measured the working path, LIFEGUARD measures the responsive portion of the path. For forward and bidirectional failures, the source can simply issue a traceroute towards the destination. For reverse failures, LIFEGUARD cannot measure a reverse traceroute from D, as such a measurement requires a response from D to determine the initial hops. Instead, LIFEGUARD has its vantage points (including S) ping:

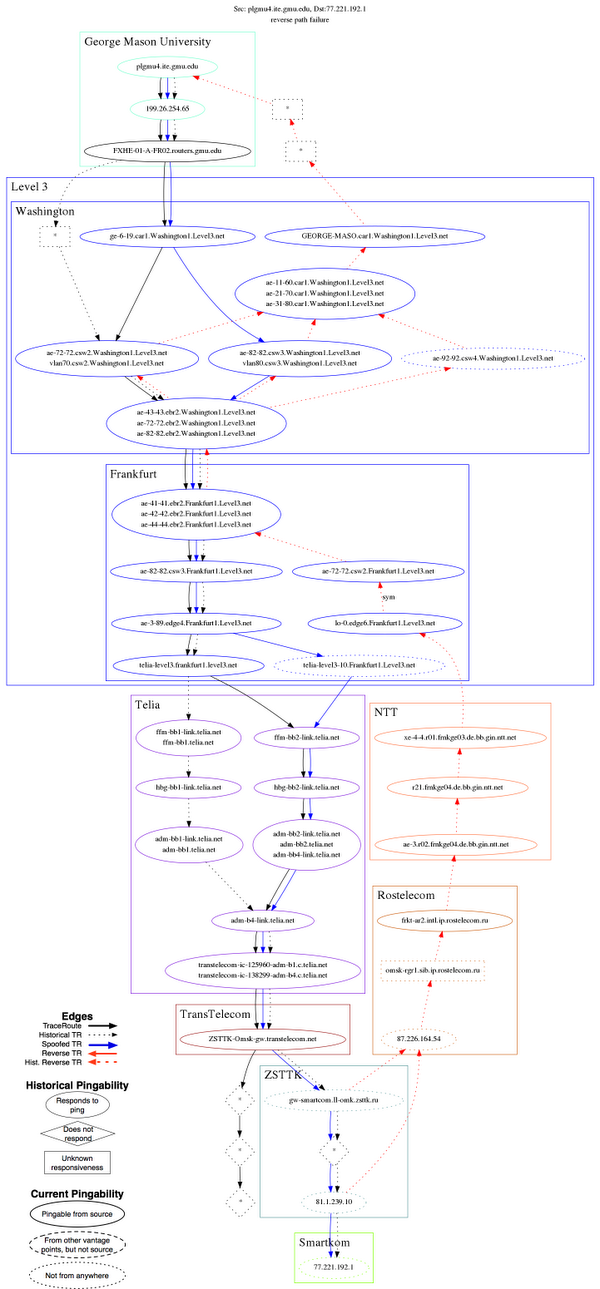
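The direction-isolation step described earlier can be illustrated with a short sketch. It is not LIFEGUARD's implementation. The names `FailureDirection` and `classify_direction` and the boolean-flag inputs are assumptions made for illustration. The sketch also simplifies by treating the absence of any reply as evidence that a direction has failed, whereas a real system would need to account for probe loss and rate limiting.

```python
from enum import Enum
from typing import Iterable


class FailureDirection(Enum):
    """Hypothetical labels for the outcome of direction isolation."""
    NONE = "no failure observed"
    FORWARD = "forward"
    REVERSE = "reverse"
    BIDIRECTIONAL = "bidirectional"


def classify_direction(
    helper_got_reply: Iterable[bool],
    source_got_reply: Iterable[bool],
) -> FailureDirection:
    """Classify an outage from the outcomes of spoofed pings.

    helper_got_reply: one flag per probe that the source S sent to the
        destination D while spoofing a helper vantage point. True means the
        helper received D's reply, so the S -> D direction delivered a probe.
    source_got_reply: one flag per probe that a helper sent to D while
        spoofing S. True means S received D's reply, so the D -> S
        direction delivered a reply.
    """
    forward_works = any(helper_got_reply)
    reverse_works = any(source_got_reply)

    if forward_works and reverse_works:
        return FailureDirection.NONE
    if forward_works:
        # Probes from S arrive at D, but replies addressed to S are lost.
        return FailureDirection.REVERSE
    if reverse_works:
        # Replies reach S, but probes sent by S never elicit one.
        return FailureDirection.FORWARD
    return FailureDirection.BIDIRECTIONAL
```

In the GMU example, probes sent by GMU were answered at other vantage points while nothing spoofed as GMU came back to GMU, which corresponds to `classify_direction([True], [False])` and yields `FailureDirection.REVERSE`.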

Prune candidate failure locations: Finally, LIFEGUARD removes any reachable hops from the suspect set, and applies heuristics to identify the responsible AS within the remaining suspects. For forward outages, the failure is likely between the last responsive hop in a traceroute and the next hop along the path towards the destination. LIFEGUARD’s historical atlas often contains paths through the last hop, providing hints about where it is trying to route. For a reverse failure, LIFEGUARD considers reverse paths from D back to S that are in its atlas prior to the failure. For the most recent path, it determines the farthest hop H along that path that can still reach S, as well as the first hop H’ past H that cannot. Given that H’ no longer has a working path to S, contacting the AS containing H’ or rerouting around it may resolve the problem. In the example, because both historical reverse paths from unresponsive forward hops traversed Rostelecom, it seems highly likely that this is the point of failure. This conclusion is further supported by the fact that all unreachable hops except Rostelecom responded to pings from other vantage points, indicating that their other outgoing paths still worked.

After isolating the outage, LIFEGUARD automatically generated the figure below, depicting the measurements the system issued to locate the failure. At the top of the figure is plgmu4.ite.gmu.edu, one of LIFEGUARD’s vantage points at George Mason University. The destination at the bottom, 77.221.192.1, belonging to Smartkom (ASN 42676) in Russia, became unreachable from the source. Each node is a router, and the arrows use the same styles as described above.
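For reverse failures, the search for H and H’ described in the pruning step can be sketched as a scan over one historical reverse path. This is a minimal illustration under stated assumptions, not LIFEGUARD's code. The function name `find_failure_boundary`, the representation of a path as a list of hop labels ordered from D towards S, and the set `reaches_source` (hops whose pings were answered at S) are all hypothetical.

```python
from typing import Optional, Sequence, Set, Tuple


def find_failure_boundary(
    reverse_path: Sequence[str],
    reaches_source: Set[str],
) -> Tuple[Optional[str], Optional[str]]:
    """Return (H, H') for one historical reverse path.

    reverse_path: intermediate hops ordered from the destination D towards
        the source S (an assumed representation).
    reaches_source: hops that can currently reach S.

    H is the hop farthest from S that can still reach S. H' is the next hop
    past H, moving away from S, which therefore cannot reach S.
    """
    # Scanning from the D end, the first hop that reaches S is the farthest
    # such hop from S.
    for index, hop in enumerate(reverse_path):
        if hop in reaches_source:
            h_prime = reverse_path[index - 1] if index > 0 else None
            return hop, h_prime

    # No hop on the path reaches S: the suspect is the hop closest to S.
    closest_to_source = reverse_path[-1] if reverse_path else None
    return None, closest_to_source
```

With made-up hop labels, `find_failure_boundary(["r4", "r3", "r2", "r1"], {"r1", "r2"})` gives H as `"r2"` and H’ as `"r3"`, so the AS containing `r3` would be the one to contact or route around.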
